Token Bucket Algorithm

In our previous blog API Rate Limiting 101, we delved into the world of rate limiting and explored various rate-limiting algorithms. Today, we are going to take a closer look at one specific algorithm: the Token Bucket Algorithm. The token bucket algorithm is a classic approach to rate limiting.

What is the Token Bucket Algorithm?

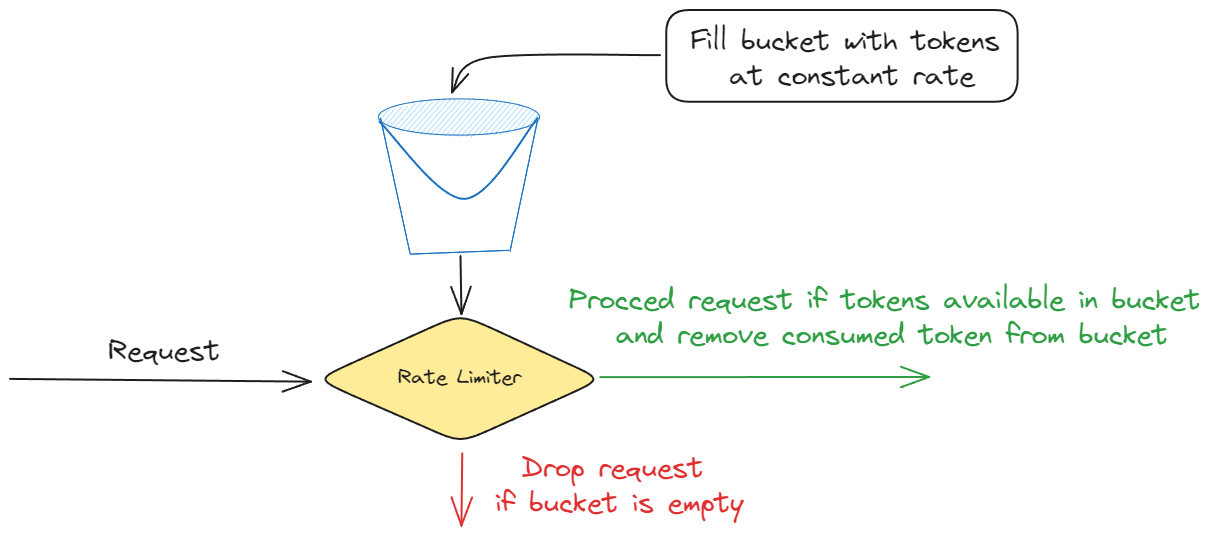

The Token Bucket Algorithm is a simple yet effective technique used to control the rate at which requests are made to a system or server.

Picture it as an actual bucket that gets filled with tokens over time. Each token represents permission to make one request. When a client wants to make an API request, it must possess a token from the bucket. If tokens are available, the request is granted, and a token is consumed. If the bucket is empty, the request is denied until more tokens are added over time.

Let's break down how this algorithm works in a straightforward manner:

Step 1: Create a Bucket

Imagine you have a bucket with a fixed capacity, which we'll call the "rate limit." This bucket can hold a certain number of tokens. In our example, we'll set a rate limit of 10 tokens.

Step 2: Refill the Bucket

The bucket isn't static; it periodically gets refilled with tokens. This is a crucial aspect of the Token Bucket Algorithm. Tokens are added to the bucket at a fixed rate. For instance, in our code, the bucket gets refilled every 2 seconds.

Step 3: Incoming Requests

When a request comes in, we check if there are any tokens in the bucket.

Step 4: Consume Tokens

If there are tokens in the bucket, we "consume" one token from it. This means the request is allowed to proceed. We also keep track of when the token was consumed.

Step 5: Empty Bucket

If the bucket is empty (no tokens available), we reject the request. This helps in preventing overloading of the server or system, ensuring that it operates within its defined limits.

Implementing the Token Bucket Algorithm:

Now, let's take a look at some code that implements the Token Bucket Algorithm using Node.js and the ExpressJS framework.

| import { CronJob } from 'cron'; | |

| import express from 'express'; | |

| const app = express(); | |

| app.use(express.json()); | |

| const PORT = process.env.PORT || 5000; | |

| const RATE_LIMIT = 10; | |

| const tokenBucket = []; | |

| // Function to refill the bucket | |

| const refillBucket = () => { | |

| if (tokenBucket.length < RATE_LIMIT) { | |

| tokenBucket.push(Date.now()); | |

| } | |

| }; | |

| // API endpoint to check the bucket's status | |

| app.get('/bucket', (req, res) => { | |

| res.json({ | |

| bucketLimit: RATE_LIMIT, | |

| currentBucketSize: tokenBucket.length, | |

| bucket: tokenBucket | |

| }); | |

| }); | |

| // Middleware for rate limiting | |

| const rateLimitMiddleware = (req, res, next) => { | |

| if (tokenBucket.length > 0) { | |

| const token = tokenBucket.shift(); | |

| console.log(`Token ${token} is consumed`); | |

| res.set('X-RateLimit-Remaining', tokenBucket.length); | |

| next(); | |

| } | |

| else | |

| { | |

| res.status(429).set('X-RateLimit-Remaining', 0).set('Retry-After', 2).json({ | |

| success: false, | |

| message: 'Too many requests' | |

| }); | |

| } | |

| }; | |

| app.use(rateLimitMiddleware); | |

| // Sample endpoint for testing rate limiting | |

| app.get('/test', (req, res) => { | |

| const ROCK_PAPER_SCISSORS = ['rock 🪨', 'paper 📃', 'scissors ✂️']; | |

| const randomIndex = Math.floor(Math.random() * 3); | |

| const randomChoice = ROCK_PAPER_SCISSORS[randomIndex]; | |

| res.json({ | |

| success: true, | |

| message: `You got ${randomChoice}` | |

| }); | |

| }); | |

| // Cron job to periodically refill the bucket | |

| const job = new CronJob('*/2 * * * * *', () => { | |

| refillBucket(); | |

| }); | |

| app.listen(PORT, () => { | |

| console.log(`Server running on port ${PORT}`); | |

| job.start(); | |

| }); |

We set up our Express server and defined the rate limit (RATE_LIMIT) as 10 requests per second.

The

refillBucketfunction adds tokens to the bucket if it's not full.We've created an endpoint

/bucketto check the current state of the bucket.The

rateLimitMiddlewaremiddleware checks if tokens are available in the bucket. If yes, it consumes one and allows the request to continue. If not, it returns a 429 (Too Many Requests) status.There's a test endpoint

/testjust for fun, which simulates serving requests.

In conclusion, the Token Bucket Algorithm is a valuable tool in the world of rate limiting, offering a straightforward yet effective way to control the flow of requests to a system or server.

Happy coding, and may your buckets always be filled with tokens! 🚀🪣